I was at Ludwig Maximilian University in April, working with a research group in Media Informatics and Human-Computer Interaction (http://www.medien.ifi.lmu.de/index.xhtml.en), trying to give non-anthropomorphic robots their own way of expressing themselves. The work was part of the Mixed Fleet project, in WP 1 (human-centered design in the Mixed Fleet context).

What are non-anthropomorphic robots? They are robots engineered not to look human, for example, Sale Robot. That’s right, no cute little faces or waving hands. These robots were designed to look nothing like us. But who says they can’t still “talk” in their own way? We used lights, sounds, and arm movements to make them expressive.

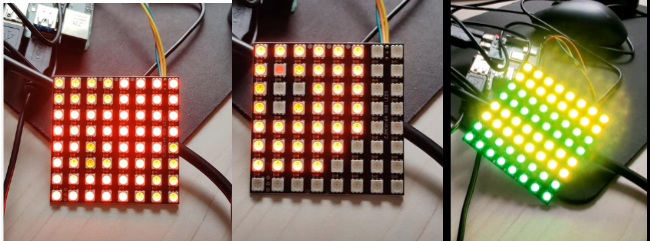

Lights, Camera, Robot!

We used an LED matrix and a Raspberry Pi to create different light patterns. Think of it like robot mood lighting. Green lights for happy, red for trouble, and rainbow for… fun!
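For the curious, here is a minimal sketch of how a mood-to-light mapping like this might look in Python. It is not our actual project code: the 8x8 matrix size, the mood names, and the function names are all assumptions made for illustration, and the step that pushes a frame to the real LED hardware is left out because it depends on the driver you use.

```python
import colorsys

WIDTH, HEIGHT = 8, 8  # assumed matrix size, not taken from the project

# Hypothetical mood names mapped to solid RGB colors
MOOD_COLORS = {
    "happy": (0, 255, 0),    # green
    "trouble": (255, 0, 0),  # red
}


def rainbow_frame(offset=0.0):
    """Return one frame whose hue sweeps across the columns."""
    frame = []
    for _ in range(HEIGHT):
        row = []
        for x in range(WIDTH):
            hue = (x / WIDTH + offset) % 1.0
            r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
            row.append((int(r * 255), int(g * 255), int(b * 255)))
        frame.append(row)
    return frame


def mood_frame(mood, offset=0.0):
    """Return a HEIGHT x WIDTH grid of RGB tuples for the given mood."""
    if mood == "fun":
        return rainbow_frame(offset)
    color = MOOD_COLORS[mood]
    return [[color] * WIDTH for _ in range(HEIGHT)]
```

Calling `mood_frame("fun", offset)` with a slowly increasing `offset` would make the rainbow scroll across the matrix.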

Arm Gestures with Pymycobot

Next, we used a robotic arm from Elephant Robotics, controlled through its pymycobot Python library. This little guy could wave, point, and move in ways that help show what the robot is “thinking” or “feeling.” It was like the robot’s way of using sign language.
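A gesture like a wave can be thought of as a short list of arm poses played one after another. The sketch below shows that idea, assuming a six-joint arm and the `send_angles(angles, speed)` call from the pymycobot Python library. The joint angles, the speed, the pause, and the names `WAVE` and `play_gesture` are illustrative guesses, not the values we used.

```python
import time

# Illustrative joint angles in degrees for a six-joint arm (assumed values)
WAVE = [
    [0, 0, 0, 0, 0, 0],
    [0, -30, -40, 0, 45, 0],
    [0, -30, -40, 0, -45, 0],
    [0, -30, -40, 0, 45, 0],
    [0, 0, 0, 0, 0, 0],
]


def play_gesture(arm, keyframes, speed=50, pause=1.0):
    """Send each pose to the arm in order, waiting between poses."""
    for angles in keyframes:
        arm.send_angles(angles, speed)
        time.sleep(pause)
    return len(keyframes)


# On real hardware (the port and baud rate here are assumptions):
# from pymycobot.mycobot import MyCobot
# play_gesture(MyCobot("/dev/ttyAMA0", 1000000), WAVE)
```

Because `play_gesture` only needs an object with a `send_angles` method, the same gesture list can be tried out against a stand-in object before it ever moves a real arm.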

Sounds: The Final Touch

We also used sounds to add another layer of expression. Cheerful beeps for when things were going well, warning buzzes for problems, and calming hums to keep things chill.
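If you want to play with the idea yourself, simple beeps, buzzes, and hums can be generated with nothing but Python's standard library. This is only a sketch: the frequencies, durations, volumes, and the cue names in `CUES` are assumptions chosen for illustration, not the sounds from our study.

```python
import math
import struct
import wave

SAMPLE_RATE = 22050  # samples per second


def tone(freq_hz, seconds, volume=0.5):
    """Return a sine tone as 16-bit mono PCM bytes."""
    n = int(SAMPLE_RATE * seconds)
    samples = (
        int(volume * 32767 * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE))
        for i in range(n)
    )
    return b"".join(struct.pack("<h", s) for s in samples)


# Hypothetical cues: a rising two-note beep, a low buzz, a quiet hum
CUES = {
    "cheerful": tone(880, 0.1) + tone(1320, 0.1),
    "warning": tone(220, 0.4),
    "calm": tone(110, 1.0, volume=0.2),
}


def save_cue(name, path):
    """Write the named cue to a WAV file."""
    with wave.open(path, "wb") as f:
        f.setnchannels(1)
        f.setsampwidth(2)
        f.setframerate(SAMPLE_RATE)
        f.writeframes(CUES[name])
```

For example, `save_cue("warning", "warning.wav")` writes a file that any audio player on the Raspberry Pi can play.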

What’s Next? The Grand Online Study!

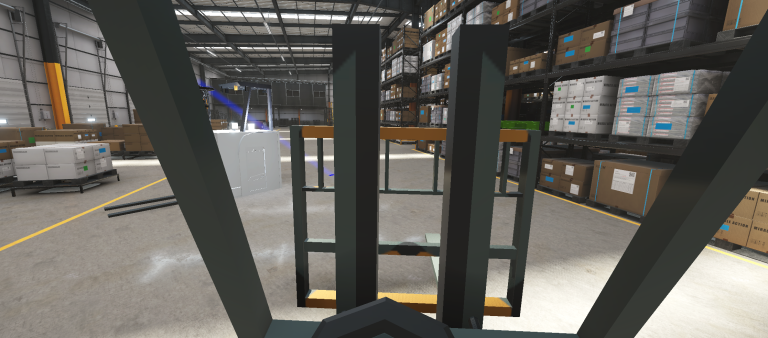

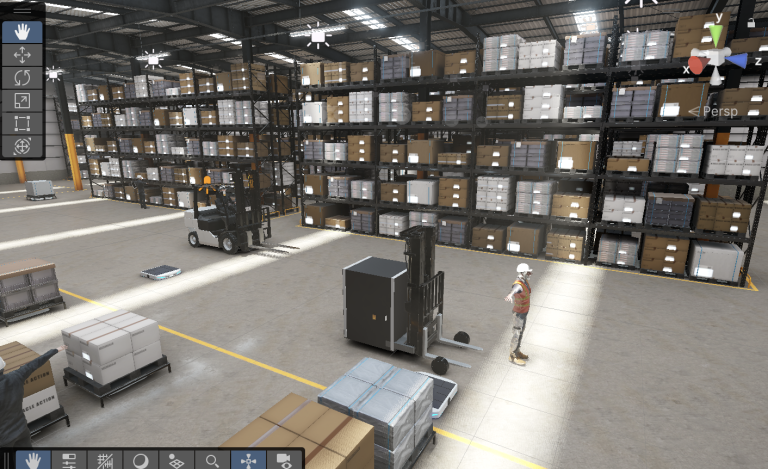

Currently, we are gearing up to run an online study to see how people react to these robots. Imagine a robot in a hospital, navigating around with its lights, sounds, and arm gestures. How will people understand and interact with it? How will the human staff react? Will they understand the robot’s “emotions” and commands? That’s what we want to find out.

The Big Reveal in August

I might be heading back to Germany in August to dive deeper into this research. Our plan is to use what we learn from the online study to see how people really understand and work with these expressive robots in real-life situations. In addition, we are planning to do something novel! Something straight out of a sci-fi movie, which we will keep a secret for now. Hint: we will try to integrate the latest tech hype!

So, stay tuned for more updates from my robot adventures. Who knows, maybe one day, we’ll have robots everywhere, showing us their feelings with lights, sounds, and cool arm moves. Cheers! Prost!🍻

Written by Aparajita Chowdhury