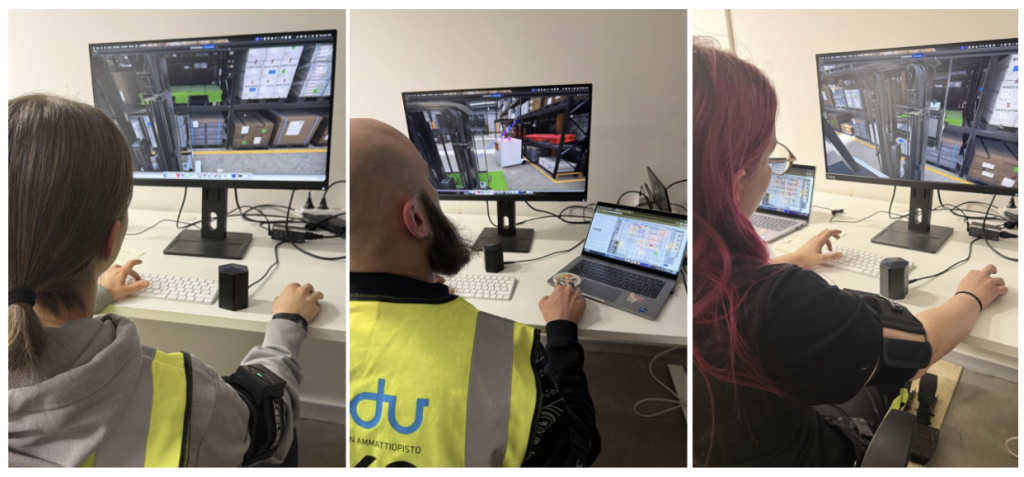

To start, participants wore two haptic sleeves, one on the left arm and one on the right. Whenever an AGV approached from either side at a crossroads, the corresponding sleeve gently vibrated. The idea was to create a quick, instinctive cue that didn’t rely on reading signals or scanning the environment. And honestly, this part worked better than expected. Many students said the vibrations lowered their cognitive load because they didn’t have to constantly keep track of where AGVs might come from. A single tap on the arm was enough to tell them something was happening, and they reacted faster and with more confidence.
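For the technically curious, the cue logic behind the sleeves can be sketched in a few lines. This is only an illustration of the idea, not the code used in the study: the `AgvSighting` type, the `sleeve_to_vibrate` function, and the 5-metre trigger distance are all hypothetical names and values made up for this example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical value: the distance at which a cue fires is an assumption,
# not a parameter reported from the study.
TRIGGER_DISTANCE_M = 5.0


@dataclass
class AgvSighting:
    """An AGV approaching the participant at a crossroads (illustrative type)."""
    side: str          # "left" or "right", relative to the participant
    distance_m: float  # distance between AGV and participant, in metres


def sleeve_to_vibrate(
    sighting: AgvSighting,
    trigger_distance_m: float = TRIGGER_DISTANCE_M,
) -> Optional[str]:
    """Return which sleeve should vibrate, or None if the AGV is too far away."""
    if sighting.side not in ("left", "right"):
        raise ValueError(f"unknown side: {sighting.side!r}")
    # The sleeve on the same side as the approaching AGV gives the cue.
    if sighting.distance_m <= trigger_distance_m:
        return sighting.side
    return None


if __name__ == "__main__":
    print(sleeve_to_vibrate(AgvSighting(side="left", distance_m=2.0)))
    print(sleeve_to_vibrate(AgvSighting(side="right", distance_m=12.0)))
```

The point of the sketch is how little the participant has to decode: one side, one vibration, no reading required.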

We also tested an AromaShooter to introduce the AGV’s “presence” in a different way. Instead of sounds or flashing lights, a small burst of scent (lavender, peach, or ocean) was meant to signal that an AGV was nearby or performing a task. This one didn’t land quite as well. Most participants didn’t notice the scent at all, or only noticed it after we told them to pay attention. It was still an interesting experiment, but clearly smell is not the strongest direction for AGV communication… at least not in a busy warehouse scenario.

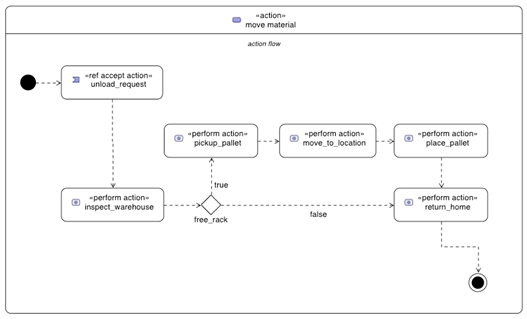

The third piece of the setup was a 2D interactive warehouse map. It showed the participant’s location, the AGVs’ positions, and possible errors occurring in the system, and it even suggested optimal routes. Reactions to the map were mixed. Some loved having an overview that helped them plan their movement and understand the bigger system. Others felt it added more information than they needed and preferred relying on visual cues inside the simulation itself. This contrast is already giving us great insights into different user preferences and what types of information future workers actually find helpful.

Overall, it was a genuinely interesting day full of data collection, problem-solving, and plenty of “oh that’s fascinating” moments from both participants and us. Every round at TREDU brings us closer to understanding how humans and AGVs can share space more smoothly, and this multisensory version added a whole new layer to the story.

More on the analysis is coming, but for now: huge thanks to the students who tested everything with such enthusiasm. On to the next iteration!

A big thanks to ScentTech Laboratory and the MultiModal Interaction Research Group for lending us the scent and haptic devices. And of course, thank you to every participant who stepped into our mixed-fleet world and shared their insights with us.

Written by Veronika & Apa