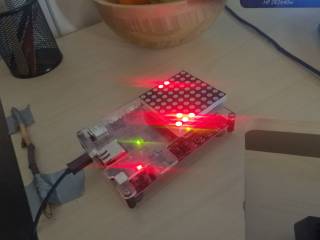

What’s special about the PYNQ-Z1 System-on-Chip (SoC) is its field-programmable gate array (FPGA), whose fabric can be programmed with custom hardware. This lets the PYNQ-Z1 emulate many kinds of real-world systems built from heterogeneous, specialized hardware.

C on PYNQ-Z1

To experiment with the build system, we used a demo game project from the course Introduction to Embedded Systems. Matti Rasinen kindly provided me with a Vivado and C implementation. The game is an adapted example project that is very easy to compile and run on the device, thanks to the automatic configuration provided by the SDK. However, turning a project managed by Vivado and the Xilinx SDK and compiled with GCC into a cross-compiled Rust project requires getting an extensive set of symbols and memory addresses configured just right. The first step was understanding how Vivado produces the hardware.

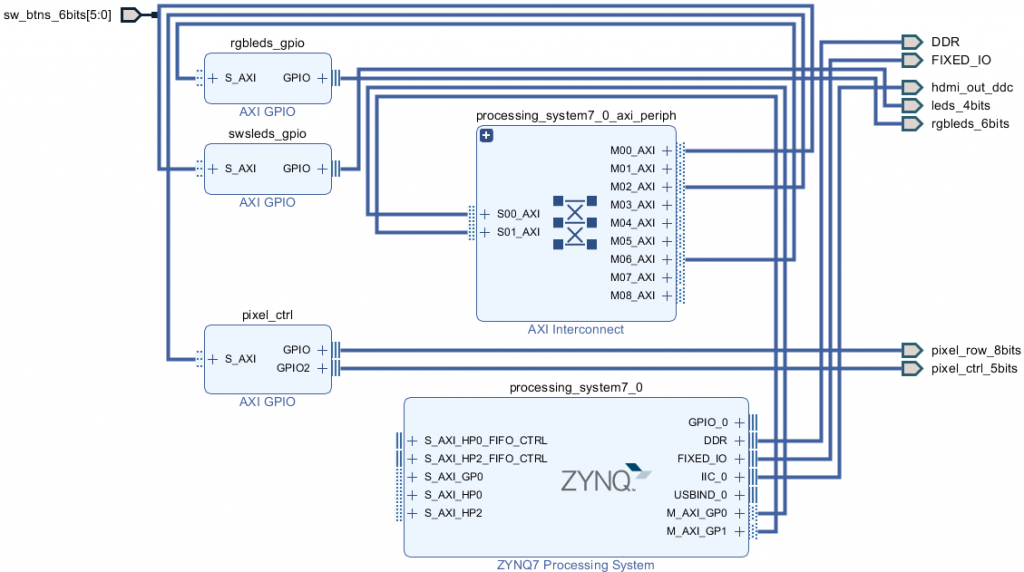

Opening up Vivado Design Suite shows a design and automation interface that lets you connect abstract wires between logical devices. Running the tool through its workflow produces a bitstream file (.bit), which is used to program the completed design into the physical hardware. Because the logical hardware may contain arcane sub-devices, the tool also needs to generate a set of drivers and libraries that let a host processor use them. Finally, these software drivers and libraries are compiled into a C library, “libxil”, in a directory whose name ends in “_bsp/”, where BSP stands for Board Support Package.

Once the hardware definition files were created, we could move on to the Xilinx SDK to program and deploy an application. Building and running a C application from the SDK interface is easy: a template project provides a main.c file, which gets linked against the previously generated driver library (libxil.a). A cross-compilation toolchain then produces a compatible binary executable, which is uploaded onto the ARM Cortex-A9 processor of the PYNQ-Z1 via xsct, the Xilinx Software Command-line Tool.

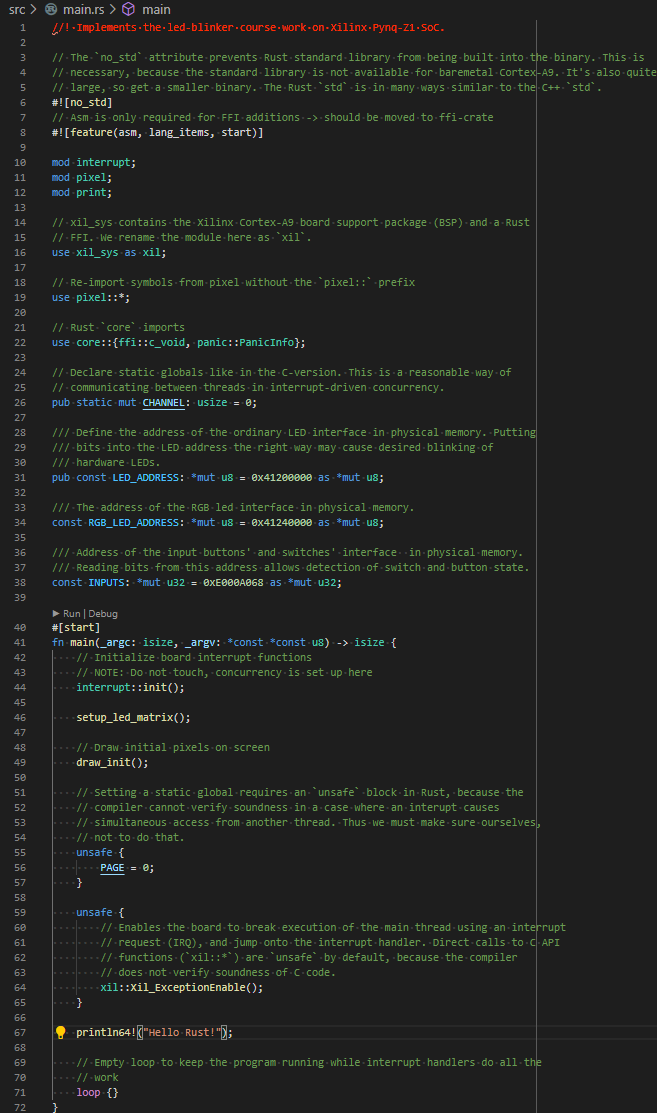

To deploy Rust in place of C, we’d need to build a correctly configured binary executable that links in the drivers generated by the Xilinx tools.

Adapting Workflow

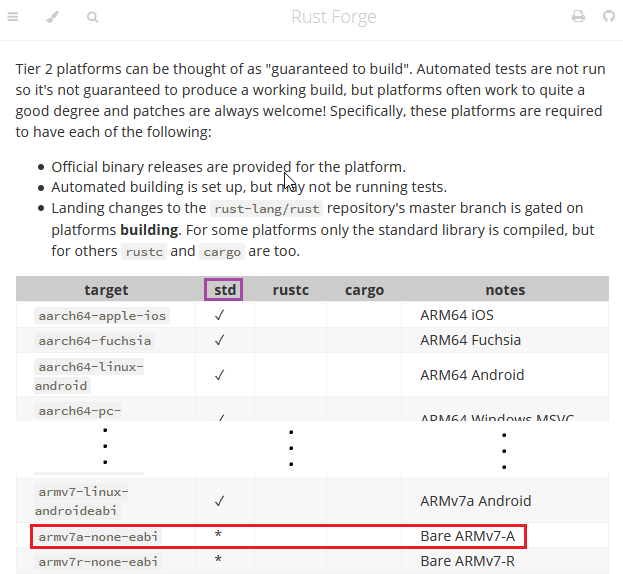

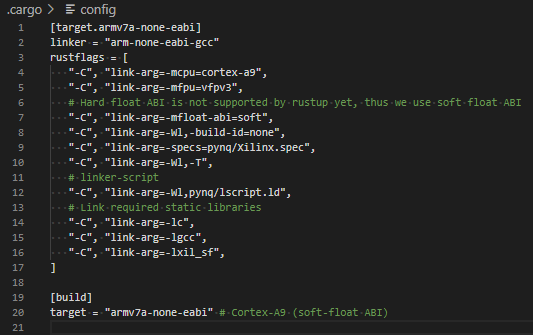

To start off on our Rust venture, we’d need at least a compatible cross-compiler toolchain. Knowing that the Cortex-A9 implements the ARMv7-A architecture, I found on the Rust Platform Support page that partial support for bare-metal ARMv7-A targets is indeed available, added by GitHub user japaric as recently as January 2020. The two target triples¹ are armv7a-none-eabi and armv7a-none-eabihf.

Of these two, only armv7a-none-eabi seemed to have proper support via rustup, the Rust toolchain manager, so I chose the path of least resistance to stay productive. This meant forgoing hardware floating point support.
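To make the naming scheme concrete, here is a small Rust sketch that splits a three-part bare-metal triple into its fields and detects the hard-float suffix. It is a simplification for illustration only: `Triple` and `parse_triple` are hypothetical names, and many real triples (such as x86_64-unknown-linux-gnu) have four components, which this sketch deliberately rejects.

```rust
/// Fields of a three-part bare-metal target triple such as `armv7a-none-eabihf`.
#[derive(Debug, PartialEq)]
pub struct Triple<'a> {
    pub arch: &'a str,    // processor architecture, e.g. "armv7a"
    pub os: &'a str,      // operating system; "none" means bare metal
    pub abi: &'a str,     // base ABI without the float suffix, e.g. "eabi"
    pub hard_float: bool, // true if the ABI field carries the "hf" suffix
}

/// Splits `triple` on '-' and returns `None` unless it has exactly three parts.
pub fn parse_triple(triple: &str) -> Option<Triple<'_>> {
    let mut parts = triple.split('-');
    let (arch, os, abi_field) = (parts.next()?, parts.next()?, parts.next()?);
    if parts.next().is_some() {
        return None; // e.g. four-part triples like x86_64-unknown-linux-gnu
    }
    // A trailing "hf" marks the hard-float variant of the ABI.
    let (abi, hard_float) = match abi_field.strip_suffix("hf") {
        Some(base) => (base, true),
        None => (abi_field, false),
    };
    Some(Triple { arch, os, abi, hard_float })
}
```

Parsing armv7a-none-eabi yields the same architecture, OS and ABI fields with `hard_float` set to false, which is the soft-float variant chosen above.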

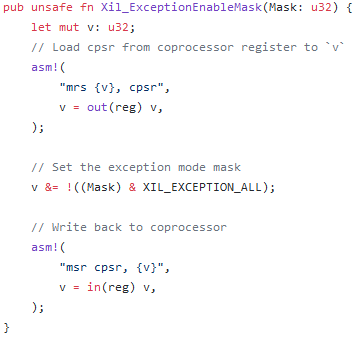

Having verified that the cross-compiler worked, we needed some way to access the drivers and board support package (BSP) in the libxil.a static library. Linking the static library from Rust is trivial, but the Rust code also needs declarations for the C symbols it calls. To get them, we used a tool called bindgen to generate a thin Rust API from the C headers. Unfortunately, while bindgen could generate most of the symbols declared in the libxil headers, it did not translate function-like macros. A function-like C macro is expanded textually at each call site, so its meaning, including the types of its arguments, depends on where it is used; bindgen works from the headers alone, with no call site against which to resolve those types. However, since we could already compile our test application, we could generate a list of the missing symbols and use the library source code to recreate the missing functionality in Rust and assembly.
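As an illustration of the kind of functionality that had to be recreated, here is a minimal sketch of how a C function-like register macro can be rewritten as inline Rust functions using volatile pointer accesses. The macro `DEV_WriteReg` and the functions `write_reg` and `read_reg` are hypothetical, not part of libxil. The sketch only uses `core`, so it also fits a no_std crate.

```rust
use core::ptr::{read_volatile, write_volatile};

/// Rust stand-in for a hypothetical C function-like macro such as
/// `#define DEV_WriteReg(Base, Off, Data) Xil_Out32((Base) + (Off), (u32)(Data))`.
///
/// # Safety
/// `base + offset` must be a valid, 4-byte-aligned address of a 32-bit register.
#[inline(always)]
pub unsafe fn write_reg(base: usize, offset: usize, data: u32) {
    // Volatile, so the compiler cannot elide or merge the store.
    unsafe { write_volatile((base + offset) as *mut u32, data) }
}

/// Counterpart of a hypothetical `DEV_ReadReg(Base, Off)` macro.
///
/// # Safety
/// Same requirements as `write_reg`.
#[inline(always)]
pub unsafe fn read_reg(base: usize, offset: usize) -> u32 {
    // Volatile, so every call really reads the register.
    unsafe { read_volatile((base + offset) as *const u32) }
}
```

On the board, `base` would be a peripheral base address such as those listed in the BSP’s xparameters.h; off the board, an ordinary array can stand in for the register block when testing the logic.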

Later, after a fair amount of mimicking the linker settings in the makefiles generated by the Xilinx SDK, we successfully cross-compiled the project. Jan Solanti helped immensely with debugging the linker issues.
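One way to express such linker settings in Cargo is a build script that prints link directives. The sketch below assumes a hypothetical BSP directory layout and a linker script named lscript.ld taken from the SDK-generated project; both paths should be adjusted to your own workspace, and this is not necessarily the exact configuration used for this project. `link_directives` and `emit` are hypothetical helpers; in build.rs, `fn main` would simply call `emit()`.

```rust
/// Hypothetical location of libxil.a inside the SDK-generated BSP.
const BSP_LIB_DIR: &str = "../standalone_bsp_0/ps7_cortexa9_0/lib";
/// Hypothetical path to the linker script copied from the SDK-generated application.
const LINKER_SCRIPT: &str = "lscript.ld";

/// Builds the Cargo directives that link libxil.a and pass the linker script along.
pub fn link_directives(lib_dir: &str, linker_script: &str) -> Vec<String> {
    vec![
        // Where the linker should search for native libraries.
        format!("cargo:rustc-link-search=native={}", lib_dir),
        // Link statically against libxil.a ("static=xil" resolves to libxil.a).
        "cargo:rustc-link-lib=static=xil".to_string(),
        // Hand the linker script, which defines the memory layout, to the linker.
        format!("cargo:rustc-link-arg=-T{}", linker_script),
        // Re-run the build script whenever the linker script changes.
        format!("cargo:rerun-if-changed={}", linker_script),
    ]
}

/// Prints the directives so that Cargo picks them up.
pub fn emit() {
    for line in link_directives(BSP_LIB_DIR, LINKER_SCRIPT) {
        println!("{}", line);
    }
}
```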

And here’s some relevant no_std Rust source code…

The compiled binary could easily be injected into the Xilinx SDK workflow with xsct, by substituting the Rust binary for the C binary in the final step, where the binary is uploaded onto the processor. Here’s a link to a video of the project running on PYNQ-Z1.

Implications

Being able to deploy Rust on the Cortex-A9 of the FPGA-enabled PYNQ-Z1 opens up quite a few possibilities. The most obvious outcome is that we can provide a drop-in replacement for the course project in COMP.CE.100 Introduction to Embedded Systems for students who wish to explore writing embedded software in Rust. We also provide straightforward, step-by-step instructions for compiling Rust on PYNQ-Z1 for anyone interested in using Rust on an FPGA platform. This lets them use Rust’s features to work faster, leveraging software and hardware libraries at a higher level of abstraction. The approach resembles the Python workflow PYNQ is known for, but without the overhead of an operating system and the Python interpreter, and with the option of going down to register-level access when necessary.

In terms of real systems work, the static analysis performed by the Rust compiler might allow user-space applications to be brought to bare metal with minimal difficulty, with the compiler ruling out whole classes of memory bugs. Implementing a driver in Rust would allow experimenting with affine types (values that can be used at most once, which is how Rust’s ownership model treats moved values) to create drivers with safe and convenient low-level APIs, such as zero-setup / zero-tear-down drivers. In the bigger picture, using Rust could expand what is considered a reasonable scale for an “embedded project” by giving access to a large ecosystem of libraries. Perhaps this open interchange between hardware and software libraries could make rapid hardware development more accessible. As an AI researcher, I am particularly excited about the possibility of creating a hybrid hardware-software backend for a maths / AI acceleration library such as ndarray or rust-autograd.
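One possible reading of “zero-setup / zero-tear-down” is RAII: setup happens when a driver handle is created, tear-down happens automatically when it is dropped, and ownership ensures the handle cannot be used afterwards. The sketch below is hypothetical: `GpioRegs`, `OutputPin` and the bit convention (1 = output) are invented for illustration, and `Cell<u32>` stands in for volatile memory-mapped registers so the code runs on a host.

```rust
use core::cell::Cell;

/// Hypothetical GPIO register block; on hardware these would be MMIO registers.
pub struct GpioRegs {
    dir: Cell<u32>,  // bit set = pin driven as output (hypothetical convention)
    data: Cell<u32>, // output levels
}

impl GpioRegs {
    pub const fn new() -> Self {
        GpioRegs { dir: Cell::new(0), data: Cell::new(0) }
    }
    pub fn dir(&self) -> u32 {
        self.dir.get()
    }
    pub fn data(&self) -> u32 {
        self.data.get()
    }
}

/// Output pin handle: configured in `new`, released in `Drop`.
/// It is neither `Copy` nor `Clone`, so it is used affinely.
pub struct OutputPin<'a> {
    regs: &'a GpioRegs,
    mask: u32,
}

impl<'a> OutputPin<'a> {
    /// Setup: mark `bit` (0..=31) as an output.
    pub fn new(regs: &'a GpioRegs, bit: u32) -> Self {
        let mask = 1u32 << bit;
        regs.dir.set(regs.dir.get() | mask);
        OutputPin { regs, mask }
    }
    pub fn set_high(&mut self) {
        self.regs.data.set(self.regs.data.get() | self.mask);
    }
    pub fn set_low(&mut self) {
        self.regs.data.set(self.regs.data.get() & !self.mask);
    }
}

impl Drop for OutputPin<'_> {
    /// Tear-down: drive the pin low, then release it back to input.
    fn drop(&mut self) {
        self.set_low();
        self.regs.dir.set(self.regs.dir.get() & !self.mask);
    }
}
```

Because the handle is moved rather than copied, calling `led.set_high()` after `drop(led)` is rejected at compile time instead of silently touching a released pin.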

Read more on COMP.CE.100 Johdatus sulautettuihin järjestelmiin (in Finnish), my take on Rust: productivity for resource-constrained implementations, or The Rust Programming Language book (link: my favorite page of the book). Also, for more bare-metal hacking, I’m teaching COMP.530-01 Bare Metal Rust later this autumn.

¹ The target triple armv7a-none-eabihf comprises three parts: armv7a identifies the processor architecture, none tells us that we’re working without an operating system, and eabihf names the ABI, where eabi stands for embedded application binary interface (EABI) and the hf (hard-float) suffix signifies that we’re using an on-chip floating-point unit.
